Auto-Mount a Remote Ubuntu HDD with SSHFS, Including Auto-Remount on Resume

If you're working across multiple Ubuntu machines—say, a lab desktop and a home desktop—you may want to mount a remote HDD over SSH so it behaves like a local drive. With sshfs, this is not only possible, but seamless. In this tutorial, we'll take it further and automate everything, including:

- ✅ Auto-mount on login
- ✅ Auto-unmount on logout
- ✅ Auto-unmount on suspend
- ✅ Auto-remount on resume

🧭 System Overview

| Machine | Role | OS Version | IP Address |
|---|---|---|---|
| Lab Desktop | SSHFS Host | Ubuntu 22.04 LTS | 100.111.75.24 |
| Home Desktop | SSHFS Client | Ubuntu 24.04 LTS | 100.76.123.70 |

We'll assume:

- Your home machine username is ehan
- Your lab machine username is manik
- You're mounting the remote HDD located at: /home/manik/8TB
- You want it mounted locally at: /home/ehan/lab8tb

🧱 Step 1: Install sshfs on Your Home Desktop

sudo apt update

sudo apt install sshfs

🔐 Step 2: Set Up SSH Key Access to the Lab PC

Run the following from your home desktop:

ssh-keygen -t rsa -b 4096 # creates ~/.ssh/id_rsa, the key the mount script points to; press Enter through all prompts

ssh-copy-id manik@100.111.75.24

Test it:

ssh manik@100.111.75.24

✅ If you get in without a password, you’re good.
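Because the mount will later run unattended, it can help to confirm that the key login succeeds without any prompt at all. The sketch below relies on ssh's BatchMode option, which makes ssh fail instead of asking for a password. The function name key_login_ok and the SSH_CMD override are assumptions made for illustration, not part of the original setup.

```bash
# Hypothetical helper: returns 0 only if key-based login works with no prompt.
# BatchMode=yes makes ssh fail instead of asking for a password or passphrase.
# SSH_CMD is an assumed override hook (defaults to ssh) so the check can be dry-tested.
key_login_ok() {
    "${SSH_CMD:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Usage (target taken from the System Overview table):
#   key_login_ok manik@100.111.75.24 && echo "key login works"
```

If the function fails, the systemd service in the later steps would most likely fail in the same way, so it is worth fixing the key setup first.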

📁 Step 3: Create a Local Mount Point

mkdir -p ~/lab8tb

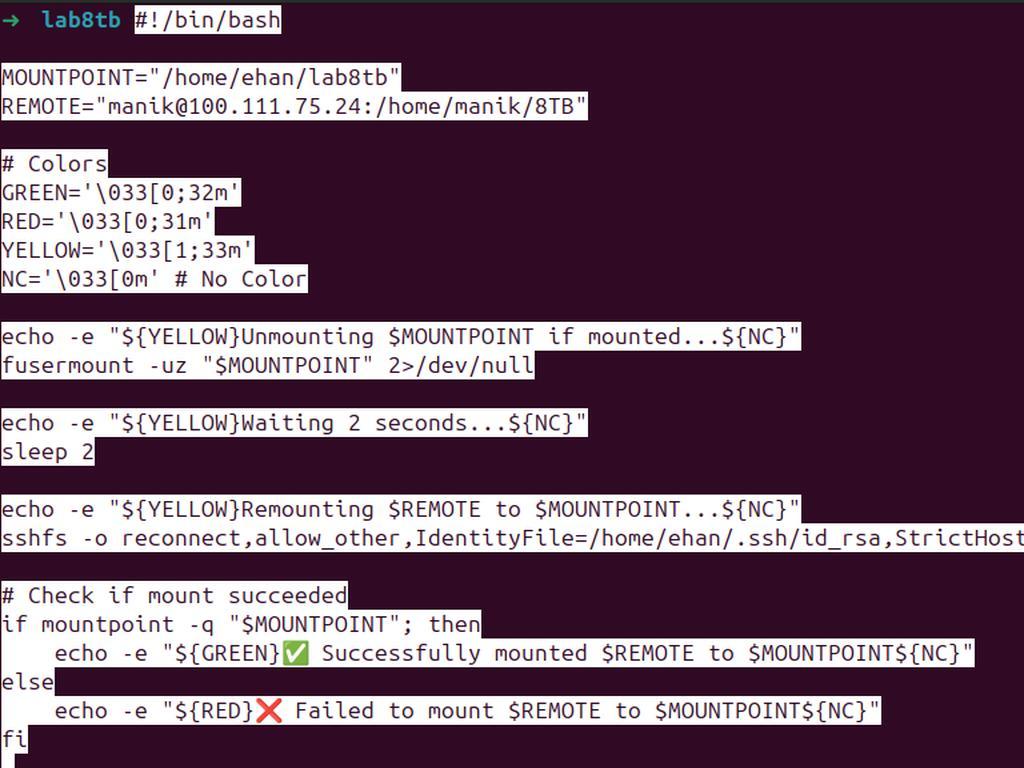

🛠️ Step 4: Write a Mount Script

Create the script:

mkdir -p ~/.local/bin

nano ~/.local/bin/mount-lab8tb.sh

Paste this:

#!/bin/bash

MOUNTPOINT="/home/ehan/lab8tb"

REMOTE="manik@100.111.75.24:/home/manik/8TB"

REMOTE_HOST="100.111.75.24"

# Color output

GREEN='\033[0;32m'

RED='\033[0;31m'

YELLOW='\033[1;33m'

NC='\033[0m'

# Ping test

ping -c 1 -W 2 "$REMOTE_HOST" > /dev/null

if [ $? -ne 0 ]; then

echo -e "${RED}❌ Remote host not reachable. Skipping mount.${NC}"

exit 1

fi

echo -e "${YELLOW}Unmounting $MOUNTPOINT if mounted...${NC}"

fusermount -uz "$MOUNTPOINT" 2>/dev/null

sleep 2

echo -e "${YELLOW}Mounting $REMOTE to $MOUNTPOINT...${NC}"

sshfs -o reconnect,allow_other,IdentityFile=/home/ehan/.ssh/id_rsa,StrictHostKeyChecking=no,UserKnownHostsFile=/dev/null,ServerAliveInterval=15,ServerAliveCountMax=3 "$REMOTE" "$MOUNTPOINT"

if mountpoint -q "$MOUNTPOINT"; then

echo -e "${GREEN}✅ Successfully mounted $REMOTE to $MOUNTPOINT${NC}"

else

echo -e "${RED}❌ Failed to mount $REMOTE to $MOUNTPOINT${NC}"

exit 1 # non-zero exit so systemd records the failed mount

fi

Make it executable:

chmod +x ~/.local/bin/mount-lab8tb.sh
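The mountpoint -q check in the script only reports that something is mounted. An SSHFS mount whose connection has dropped can still count as mounted while reads hang or fail. A possible extra probe, sketched under the assumption that coreutils timeout is available, is shown here; the name mount_healthy and the 5-second default are illustrative.

```bash
# Hypothetical helper: succeed only if the directory can be listed within a time limit.
# $1 = directory to probe, $2 = timeout in seconds (assumed default: 5).
# -k 2 sends SIGKILL two seconds later if the listing ignores the first signal.
mount_healthy() {
    timeout -k 2 "${2:-5}" ls "$1" > /dev/null 2>&1
}

# Usage inside the mount script, after the mountpoint check:
#   mount_healthy "$MOUNTPOINT" || echo "mount is present but not responding"
```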

⚙️ Step 5: Create a systemd User Service for Auto-Mount

mkdir -p ~/.config/systemd/user

nano ~/.config/systemd/user/mount-lab8tb.service

Paste:

[Unit]

Description=Mount lab 8TB via SSHFS

After=network-online.target

Wants=network-online.target

[Service]

Type=oneshot

ExecStart=/home/ehan/.local/bin/mount-lab8tb.sh

ExecStop=/bin/fusermount -uz /home/ehan/lab8tb

RemainAfterExit=true

TimeoutSec=30

[Install]

WantedBy=default.target

Enable it:

systemctl --user daemon-reload

systemctl --user enable mount-lab8tb.service

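Also enable lingering so the service runs even when you are not logged in to a GUI session. With lingering enabled, your user manager starts at boot and keeps running after logout, so the drive is mounted at boot and stays mounted until shutdown; skip this step if you want the mount to follow login and logout: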

loginctl enable-linger ehan
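To confirm that the setting took effect, loginctl can be queried for the Linger property. The helper below is a small sketch; the function name linger_enabled is an assumption, and it expects loginctl show-user to print a line of the form Linger=yes.

```bash
# Hypothetical helper: report whether lingering is enabled for a user.
# Relies on `loginctl show-user`, which prints a line such as "Linger=yes".
linger_enabled() {
    [ "$(loginctl show-user "$1" --property=Linger 2>/dev/null)" = "Linger=yes" ]
}

# Usage:
#   linger_enabled ehan && echo "lingering is on"
#   systemctl --user is-enabled mount-lab8tb.service
```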

💤 Step 6: Auto-Unmount Before Suspend

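sleep.target is activated only in the system manager, so a unit enabled with systemctl --user is never triggered by it. Install this unit system-wide instead, with User=ehan so that the unmount still runs as your user:

sudo nano /etc/systemd/system/unmount-lab8tb-on-suspend.service

Paste:

[Unit]

Description=Unmount lab8tb on suspend

Before=sleep.target

[Service]

Type=oneshot

User=ehan

ExecStart=/bin/fusermount -uz /home/ehan/lab8tb

[Install]

WantedBy=sleep.target

Enable it:

sudo systemctl daemon-reload

sudo systemctl enable unmount-lab8tb-on-suspend.service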

🔄 Step 7: Auto-Remount After Resume

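suspend.target exists only in the system manager, where it is reached once the machine has resumed, so a unit enabled with systemctl --user never sees it. Install this unit system-wide, with User=ehan so that the script still runs as your user. KillMode=process keeps systemd from killing the background sshfs process once the script exits:

sudo nano /etc/systemd/system/remount-lab8tb-on-resume.service

Paste:

[Unit]

Description=Remount lab8tb on resume

After=suspend.target

[Service]

Type=oneshot

User=ehan

KillMode=process

ExecStart=/home/ehan/.local/bin/mount-lab8tb.sh

[Install]

WantedBy=suspend.target

Enable it:

sudo systemctl daemon-reload

sudo systemctl enable remount-lab8tb-on-resume.service

🧪 Step 8: Testing

Test mount manually:

systemctl --user start mount-lab8tb.service

Test unmount manually:

systemctl --user stop mount-lab8tb.service

Simulate resume:

sudo systemctl start remount-lab8tb-on-resume.service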
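Right after a resume the network is often not back yet, so the single ping at the top of the mount script can fail and skip the remount. One way to soften this is a short retry loop. The sketch below is an assumption-laden example: wait_for_host, its defaults (5 attempts, 3 seconds apart), and the PROBE_CMD override are invented here for illustration. The defaults are chosen to stay under the 30-second TimeoutSec used in Step 5.

```bash
# Hypothetical helper: retry a reachability probe before giving up.
# $1 = host, $2 = max attempts (assumed default: 5), $3 = pause in seconds (assumed default: 3).
# PROBE_CMD is an assumed override hook; without it, the tutorial's ping test is used.
wait_for_host() {
    local host=$1 attempts=${2:-5} pause=${3:-3} i=1
    while [ "$i" -le "$attempts" ]; do
        if [ -n "$PROBE_CMD" ]; then
            "$PROBE_CMD" "$host" && return 0
        else
            ping -c 1 -W 2 "$host" > /dev/null 2>&1 && return 0
        fi
        # Pause only if another attempt follows.
        if [ "$i" -lt "$attempts" ]; then
            sleep "$pause"
        fi
        i=$((i + 1))
    done
    return 1
}

# Usage at the top of mount-lab8tb.sh, in place of the single ping:
#   wait_for_host "$REMOTE_HOST" || exit 1
```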

🎉 That’s It!

You now have a complete, automated SSHFS setup that:

- Mounts your lab HDD when you log in
- Unmounts cleanly on logout or suspend
- Remounts automatically after waking up

This gives you a low-friction workflow for sharing storage across Linux machines over the network.

Let me know if you'd like to add:

- 🔒 Auto-lock if mount fails
- 📬 Desktop notifications on mount/unmount
- 📦 Mount multiple drives (e.g., backup, scratch)


How to Automate SSHFS Drive Mounting After Reboot on Linux

When working with remote servers or networked file systems, it's common to mount remote directories onto a local machine. One way of doing this is by using SSHFS (SSH File System), which allows you to mount a remote directory over SSH. This is perfect for accessing files on a remote server just as if they were local.

In this blog post, we’ll explain how to mount multiple SSHFS drives on Linux and automate the process so they are remounted automatically after a reboot or shutdown. We’ll cover the following:

- Creating the mount_drives.sh Script
- Automating with systemd
- Automating with crontab
- Understanding the Script and Methods

Step 1: Create the mount_drives.sh Script

The first step is creating a bash script that will mount the remote SSHFS drives. Below is the script that handles both mounting and unmounting of the drives:

#!/bin/bash

# Define mount points and remote locations

MOUNTPOINT1="/home/ehan/lab8tb"

MOUNTPOINT2="/home/ehan/LAB2TB"

REMOTE1="manik@100.111.75.24:/home/manik/8TB"

REMOTE2="manik@100.111.75.24:/home/manik/1.8TB"

REMOTE_HOST="100.111.75.24"

# Color output

GREEN='\033[0;32m'

RED='\033[0;31m'

YELLOW='\033[1;33m'

NC='\033[0m'

# Ping test

ping -c 1 -W 2 "$REMOTE_HOST" > /dev/null

if [ $? -ne 0 ]; then

echo -e "${RED}❌ Remote host not reachable. Skipping mount.${NC}"

exit 1

fi

# Unmount if already mounted

echo -e "${YELLOW}Unmounting $MOUNTPOINT1 if mounted...${NC}"

fusermount -uz "$MOUNTPOINT1" 2>/dev/null

echo -e "${YELLOW}Unmounting $MOUNTPOINT2 if mounted...${NC}"

fusermount -uz "$MOUNTPOINT2" 2>/dev/null

sleep 2

# Mount the first drive

echo -e "${YELLOW}Mounting $REMOTE1 to $MOUNTPOINT1...${NC}"

sshfs -o reconnect,allow_other,IdentityFile=/home/ehan/.ssh/id_rsa,StrictHostKeyChecking=no,UserKnownHostsFile=/dev/null,ServerAliveInterval=15,ServerAliveCountMax=3 "$REMOTE1" "$MOUNTPOINT1"

# Check if first drive is mounted

if mountpoint -q "$MOUNTPOINT1"; then

echo -e "${GREEN}✅ Successfully mounted $REMOTE1 to $MOUNTPOINT1${NC}"

else

echo -e "${RED}❌ Failed to mount $REMOTE1 to $MOUNTPOINT1${NC}"

fi

# Mount the second drive

echo -e "${YELLOW}Mounting $REMOTE2 to $MOUNTPOINT2...${NC}"

sshfs -o reconnect,allow_other,IdentityFile=/home/ehan/.ssh/id_rsa,StrictHostKeyChecking=no,UserKnownHostsFile=/dev/null,ServerAliveInterval=15,ServerAliveCountMax=3 "$REMOTE2" "$MOUNTPOINT2"

# Check if second drive is mounted

if mountpoint -q "$MOUNTPOINT2"; then

echo -e "${GREEN}✅ Successfully mounted $REMOTE2 to $MOUNTPOINT2${NC}"

else

echo -e "${RED}❌ Failed to mount $REMOTE2 to $MOUNTPOINT2${NC}"

fi
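The script above repeats the same unmount, mount, and check steps for each drive. As the number of drives grows, a loop over a list may be easier to maintain. The following is a minimal sketch, not a drop-in replacement: mount_all, SSHFS_OPTS, and the DRY_RUN switch are names invented here for illustration, and paths containing spaces are not handled.

```bash
# SSHFS_OPTS copies the option string used in the script above.
SSHFS_OPTS="reconnect,allow_other,IdentityFile=/home/ehan/.ssh/id_rsa,StrictHostKeyChecking=no,UserKnownHostsFile=/dev/null,ServerAliveInterval=15,ServerAliveCountMax=3"

# Hypothetical helper: reads "remote mountpoint" pairs from stdin, one pair per line.
# With DRY_RUN=1 (an assumed switch) it only prints the commands it would run.
mount_all() {
    local remote mountpoint
    while read -r remote mountpoint; do
        # Skip blank lines in the list.
        if [ -z "$remote" ]; then
            continue
        fi
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "sshfs -o $SSHFS_OPTS $remote $mountpoint"
        else
            fusermount -uz "$mountpoint" 2>/dev/null
            # stdin is redirected so ssh does not swallow the remaining list lines.
            sshfs -o "$SSHFS_OPTS" "$remote" "$mountpoint" < /dev/null
            mountpoint -q "$mountpoint" || echo "Failed to mount $remote" >&2
        fi
    done
}

# Usage:
#   mount_all <<'EOF'
#   manik@100.111.75.24:/home/manik/8TB /home/ehan/lab8tb
#   manik@100.111.75.24:/home/manik/1.8TB /home/ehan/LAB2TB
#   EOF
```

Running it once with DRY_RUN=1 shows the exact commands before anything is mounted.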

Explanation of the Script

- Variables:
  - MOUNTPOINT1 and MOUNTPOINT2 specify the local directories where the remote drives will be mounted.
  - REMOTE1 and REMOTE2 define the remote directories on the server.
- Ping Test:
  - The script first pings the remote server to ensure it’s reachable before trying to mount the drives. If the ping fails, the script exits.
- Unmount Existing Mounts:
  - Before attempting to mount new drives, the script unmounts any existing ones using fusermount.
- Mounting with SSHFS:
  - The script uses sshfs to mount the remote drives at the specified mount points. It includes options for reconnection and keeps the SSH session alive.
- Checking for Success:
  - After each mount attempt, the script checks if the mount was successful using the mountpoint -q command.

Step 2: Automating the Script with systemd

To ensure your drives are mounted automatically after a reboot, you can create a systemd service that runs the mount_drives.sh script on startup.

Create a systemd Service File

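- Create the service file:

sudo nano /etc/systemd/system/mount_drives.service

- Add the following content:

[Unit]

Description=Mount SSHFS Drives

After=network-online.target

Wants=network-online.target

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/usr/bin/bash /usr/local/bin/mount_drives.sh

Restart=on-failure

[Install]

WantedBy=multi-user.target

- Enable the service:

sudo systemctl daemon-reload

sudo systemctl enable mount_drives.service

- Start the service immediately (for testing):

sudo systemctl start mount_drives.service

Now, your system will automatically mount the SSHFS drives on reboot. After=network-online.target together with Wants=network-online.target makes systemd wait until the network is actually up before running the script; After=network.target alone only orders the unit after the network stack has been started. Type=oneshot with RemainAfterExit=yes keeps the service active after the script exits, so systemd does not kill the sshfs processes the script leaves running in the background.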

Step 3: Automating the Script with crontab

If you prefer a simpler method to run the script at startup, you can use cron. The @reboot directive in crontab allows you to run a command automatically every time the system reboots.

Create a Cron Job

- Edit the crontab:

sudo crontab -e

- Add the following line to the crontab:

@reboot /usr/bin/bash /usr/local/bin/mount_drives.sh

- Ensure the script is executable:

sudo chmod +x /usr/local/bin/mount_drives.sh

- Reboot your system to test:

sudo reboot

After rebooting, the cron job should automatically execute the script and mount the remote drives.
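If you would rather add the entry without opening an editor, or want to avoid duplicate lines when repeating the setup, the crontab can be rewritten through a pipe. The helper below is a sketch; merge_cron_line is a hypothetical name, and it only compares whole lines exactly.

```bash
# Hypothetical helper: print a crontab (read from stdin) with one entry added,
# skipping the addition when an identical line is already present.
merge_cron_line() {
    local line=$1 existing
    existing=$(cat)
    if [ -n "$existing" ]; then
        printf '%s\n' "$existing"
    fi
    if ! printf '%s\n' "$existing" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}

# Usage: rewrite the current user's crontab in one step (prefix both crontab calls
# with sudo to edit root's crontab instead).
#   crontab -l 2>/dev/null | merge_cron_line '@reboot /usr/bin/bash /usr/local/bin/mount_drives.sh' | crontab -
```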

Step 4: Automating with /etc/fstab (Less Recommended for SSHFS)

The /etc/fstab file is typically used to configure local file systems to be mounted automatically at boot. However, because SSHFS depends on network connectivity, it's not ideal to use it for SSHFS mounts. That said, if you want to experiment with it, you can add the following lines to /etc/fstab:

manik@100.111.75.24:/home/manik/8TB /home/ehan/lab8tb fuse.sshfs defaults,allow_other,IdentityFile=/home/ehan/.ssh/id_rsa,StrictHostKeyChecking=no,UserKnownHostsFile=/dev/null 0 0

manik@100.111.75.24:/home/manik/1.8TB /home/ehan/LAB2TB fuse.sshfs defaults,allow_other,IdentityFile=/home/ehan/.ssh/id_rsa,StrictHostKeyChecking=no,UserKnownHostsFile=/dev/null 0 0

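Note: This method is not recommended for SSHFS, because entries like these are mounted at boot without waiting for the network. You may encounter issues if the network is unavailable during boot; adding the _netdev option, or noauto,x-systemd.automount to mount on first access, reduces that risk.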

Conclusion

Automating SSHFS drive mounting ensures that your remote drives are always available after a reboot. While there are several methods to achieve this, systemd provides the most robust solution, especially when dealing with network dependencies. crontab offers a simpler, lightweight alternative, and /etc/fstab can be used but is less ideal for network mounts like SSHFS.

Choose the method that best fits your needs, and enjoy a seamless, automated file system experience!

Let me know if you need any further clarifications or help with setting it up!

If the systemd service approach isn’t reliable in your case (especially with user sessions, SSH keys, or GUI-related delays), switching to a cron job is a reasonable alternative.

Here’s how to run your mount_drives.sh script on boot with a delay, using cron.

✅ Step-by-Step: Use crontab to Run on Boot

1. Edit your user’s crontab:

crontab -e

2. Add this line at the bottom:

@reboot sleep 30 && /usr/local/bin/mount_drives.sh

⏱️ sleep 30 adds a 30-second delay after boot; adjust as needed.

Make sure the script does not require sudo to run (e.g., fusermount, sshfs, and mount paths must be accessible as your normal user).

✅ Additional Setup

3. Make sure the script is executable:

sudo chmod +x /usr/local/bin/mount_drives.sh

4. Ensure FUSE allows non-root allow_other use

Open the FUSE configuration file:

sudo nano /etc/fuse.conf

and make sure this line is uncommented:

user_allow_other
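A quick way to verify the setting without opening the file is to search for an uncommented user_allow_other line. The function name allow_other_enabled below is an assumption for illustration; the optional argument exists only so the check can be pointed at another file.

```bash
# Hypothetical helper: succeed if user_allow_other is active (uncommented) in fuse.conf.
# $1 = config path (defaults to /etc/fuse.conf).
allow_other_enabled() {
    grep -Eq '^[[:space:]]*user_allow_other[[:space:]]*$' "${1:-/etc/fuse.conf}"
}

# Usage:
#   allow_other_enabled || echo "uncomment user_allow_other in /etc/fuse.conf"
```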

✅ Optional: View Output Logs

Because cron doesn't show output in a terminal, modify the cron entry to log output. A user crontab normally cannot write to /var/log, so log to a file in your home directory:

@reboot sleep 30 && /usr/local/bin/mount_drives.sh >> "$HOME/mount_drives.log" 2>&1

This ensures you can check what happened:

cat ~/mount_drives.log
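Since the log is appended to on every boot, a timestamp on each message makes it easier to tell runs apart. A minimal sketch, with log as a hypothetical helper name that could be defined near the top of mount_drives.sh:

```bash
# Hypothetical helper: prefix each message with a timestamp so log entries
# from different boots can be told apart.
log() {
    printf '%s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*"
}

# Usage inside mount_drives.sh:
#   log "Mounting $REMOTE1 to $MOUNTPOINT1"
```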

✅ Test Without Reboot (for debugging)

You can manually simulate the script:

/usr/local/bin/mount_drives.sh

Or simulate the cron environment:

env -i HOME=$HOME PATH=/usr/bin:/bin /usr/local/bin/mount_drives.sh

Let me know if you'd like to:

- Use a GUI tool like gnome-schedule
- Set it up only after login instead of boot
- Add desktop notifications on success/fail
